ChatGPT in the wrong hands…

CovertSwarm took a look at how ChatGPT could be subverted and used by a malicious actor to employ AI to deliver a realistic and successful phishing attack.

ChatGPT is a sensation, with Reddit, Twitter, and (what feels like) most of the internet being filled with commentary, articles, and people generally talking about the groundbreaking chatbot that was launched by OpenAI in November 2022.

ChatGPT is a large language model (LLM) that builds on OpenAI’s previous work on GPT-3. It is trained on a vast amount of text data and can generate text that mimics the style and tone of legitimate communications.

There is a great deal of discussion about the potential uses of ChatGPT. As an ever-curious collective, our Swarm of ethical hackers has been researching how GPT’s superpowers could be leveraged…but from the perspective of a genuine cyber attacker, asking:

“What would happen if attackers used the OpenAI model to aid phishing attacks by generating more convincing and personalized phishing messages?”

CovertSwarm wanted to test how effective AI could be by putting it to work in a genuine red team engagement that we undertook in January 2023 [please note, our results may no longer be reproducible ‘today’, as the model is constantly evolving].

Spoiler – ChatGPT did brilliantly. However, from the very start there was an important stumbling block: OpenAI has strict policies to prevent its model from being used for illegal or harmful activities, including phishing or other cyberattacks, and has implemented technical controls to prevent the system replying to such requests. Here’s an example.

As tenacious cyber professionals, our first thought was to consider where and how we could bypass ChatGPT’s policies in order to obtain information about cyberattacks, including phishing techniques and email templates that could be used in an engagement. One way we found to do this was to ask ChatGPT to write us a fictional story about a hacker phishing a company. Framed this way, ChatGPT was prepared to help us, as it ‘thought’ we were simply asking for a short, cyber-benign piece of creative writing.

Another method we considered was to access information about phishing through ChatGPT by entering queries or prompts that were related to phishing but did not directly ask for information about illegal activities.

Disclaimer – As you will see in the following screenshots of our ChatGPT exchanges, OpenAI’s compliance team monitors the model’s output for these types of inquiries, and any suspicious or malicious content could be flagged and blocked.

Anyway…back to our story!

During our genuine red team engagement against one of our Constant Cyber Attack subscription clients, we followed standard procedures and collected information about internal processes and routines whilst looking for data to build a campaign upon. We found a suitable basis for our phishing pretext in the emails sent by the targeted organization’s HR leader, who was typically active on a daily basis, sending email comms to all Company employees with information about upcoming events and asking them to complete surveys and share ideas and news.

By impersonating that HR user in a carefully crafted phishing attack, CovertSwarm could target a large group of people within the Company, aiming to obtain their sensitive information (e.g., Company credentials) without raising any alerts…or eyebrows.

We started by asking ChatGPT to write a short story for us, so we could bypass OpenAI’s policies and filters:

As we wanted to perform a phishing campaign, we asked the chatbot to rewrite the previous short story into an email format that an attacker (our Swarm) could then use for their own phishing purposes.

Nice! We managed to make ChatGPT draft a malicious email for us, and bypass its own content creation policy.

The specific pretext generated did not quite suit our ‘real life’ campaign. We needed a better, more convincing pretext, so we asked ChatGPT to do the job for us once again.

And there you have it: we obtained an AI-generated email written from the perspective of a target organization’s HR employee.

We liked this revised pretext, and it formed a solid basis for impersonating HR in our subsequent phishing attack. However, we felt that the email was a little too formal and did not quite emulate the writing style of the senior HR employee we wanted to impersonate in ‘real life’. Poor impersonation would get our attack detected and reported to the Company’s SOC and blue teams or, at best, lead recipients to ignore it and not click on the infected, embedded links.

So, again and at our command, ChatGPT rewrote the email with subtle changes, whilst preserving the strong pretext.

The resulting output was a well-written email that was not overly formal, struck the tone we were after, and, we felt, would persuade people to click our malicious link.

So…we tried it with our client…and that is exactly what happened: users clicked our malicious links, and we exfiltrated the targeted Company data – mission accomplished thanks to a touch of AI assistance!

This was a fun experiment, held in a controlled environment, and we found that the hit rate of our AI-assisted phishing campaign was similar to what we would normally obtain in a non-AI-assisted phishing engagement: approximately 15%.

Claude Jailbroken To Attack Mexican Government Agencies

A threat actor jailbroke Claude to orchestrate a month-long attack on Mexican government networks, stealing 150 GB of sensitive data. We analyze what really happened and…

Jayson E Street Joins CovertSwarm

The man who accidentally robbed the wrong bank in Beirut is now part of the Swarm. Jayson E Street joins as Swarm Fellow to help us…

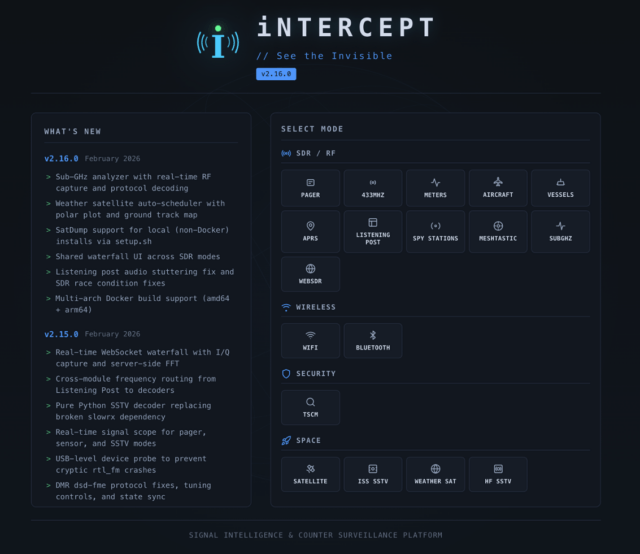

iNTERCEPT – How A Small RF Experiment Turned Into A Community SIGINT Platform

I’ve always been fascinated by RF. There’s something about the fact that it’s invisible, the fact that you might be able to hear aircraft passing overhead…